Hi Phil. Welcome to our User Forum!

We recently wrote a paper about DINED Mannequin:

Huysmans, T., Goto, L., Molenbroek, J., & Goossens, R. (2020). DINED Mannequin. Tijdschrift voor Human Factors, 45(1), 4-7.

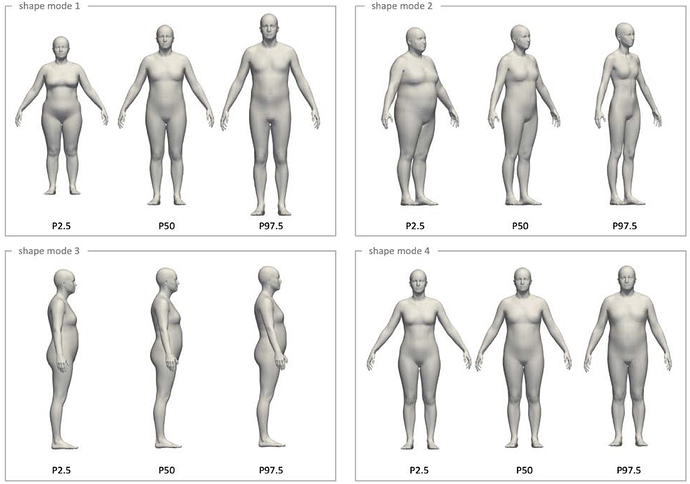

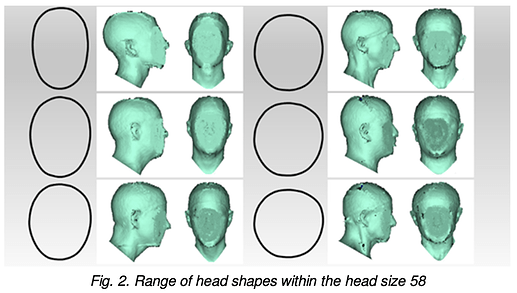

In short, when you select a number of measures in the Mannequin tool, we fit a linear regression from the values of those measures for each subject in the database to the shape coordinates of that subject's 3D scan. Shape coordinates are a compact set of numbers (40) that describe the body shape, obtained with dimensionality reduction techniques. They replace the thousands of 3D mesh point coordinates with a much smaller set of values, typically a few tens. Each shape coordinate corresponds to a typical shape mode present in the data, ordered by decreasing importance. See this figure (from the paper above) for a visualisation of the first few shape modes:

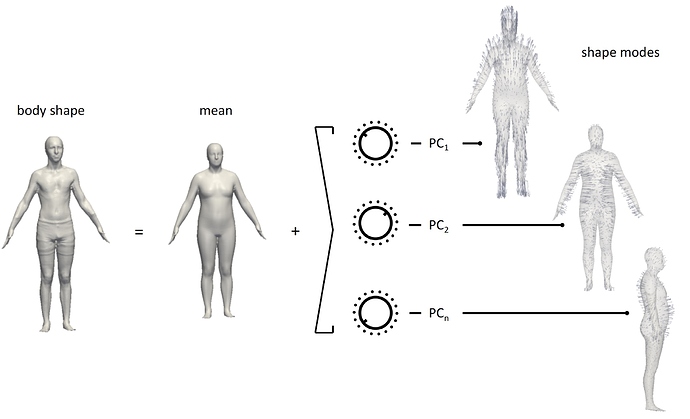

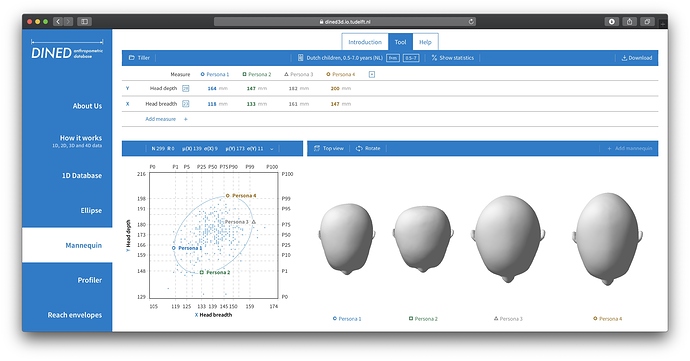
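To make the two steps above more concrete, here is a minimal sketch in Python with NumPy. It is not the actual Mannequin code: the data are random stand-ins for the database, the mesh size is made up, and using PCA (via SVD) as the dimensionality reduction technique is an assumption for illustration. Only the number of shape coordinates (40) comes from the description above.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for the database (NOT DINED data): 200 subjects,
# 3 measures each, and 500 mesh vertices (1500 coordinates) per scan.
n_subjects, n_measures, n_coords, n_modes = 200, 3, 1500, 40
measures = rng.normal(size=(n_subjects, n_measures))
true_map = rng.normal(size=(n_measures, n_coords))
meshes = measures @ true_map + 0.1 * rng.normal(size=(n_subjects, n_coords))

# Dimensionality reduction (assumed here: PCA via SVD).
# The rows of `modes` are shape modes in decreasing order of importance.
mean_mesh = meshes.mean(axis=0)
_, singular_values, modes = np.linalg.svd(meshes - mean_mesh, full_matrices=False)
modes = modes[:n_modes]                          # shape (40, 1500)
shape_coords = (meshes - mean_mesh) @ modes.T    # shape (200, 40)

# Linear regression from measures (plus an intercept column) to shape coordinates.
design = np.column_stack([np.ones(n_subjects), measures])
coeffs, *_ = np.linalg.lstsq(design, shape_coords, rcond=None)  # shape (4, 40)
```

Each subject's scan is reduced from 1500 numbers to 40, and the regression only has to predict those 40 values from the selected measures.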

In that way, we build a linear model expressing 3D shape in terms of 1D measures. When you enter values for the persona in Mannequin, we use the linear regression model to go from the measure values to the shape coordinates. These shape coordinates are then used to recreate a 3D shape. Creating a 3D shape from a set of shape parameters (shown as dials) is illustrated here:

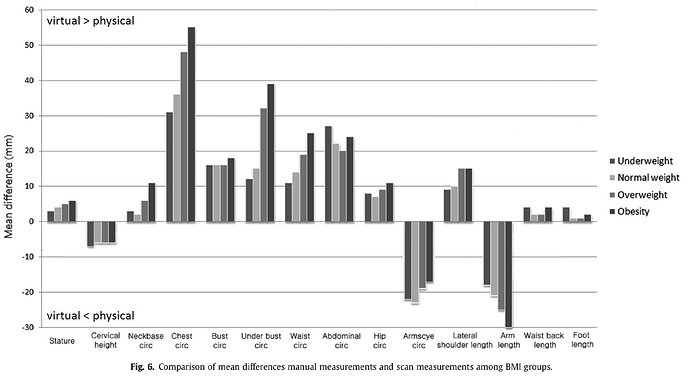
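The path from entered values to a 3D shape can be sketched as follows. This is again only an illustration under assumptions: `coeffs`, `mean_mesh`, and `modes` are random placeholders standing in for a fitted model, `persona_to_mesh` is a hypothetical helper name, and the two example measures and their units are made up.

```python
import numpy as np

rng = np.random.default_rng(1)
n_measures, n_modes, n_vertices = 2, 40, 100

# Hypothetical fitted model (random placeholders, not the real Mannequin model).
coeffs = rng.normal(size=(n_measures + 1, n_modes))   # row 0 is the intercept
mean_mesh = rng.normal(size=n_vertices * 3)           # average shape, flattened
modes = rng.normal(size=(n_modes, n_vertices * 3))    # one shape mode per row

def persona_to_mesh(measure_values):
    """Map entered measure values to vertex positions of shape (n_vertices, 3)."""
    x = np.concatenate([[1.0], measure_values])       # prepend the intercept term
    shape_coords = x @ coeffs                         # measures -> shape coordinates
    flat = mean_mesh + shape_coords @ modes           # shape coordinates -> mesh
    return flat.reshape(n_vertices, 3)

# Example call with two made-up measure values (e.g. in mm).
mesh = persona_to_mesh(np.array([1750.0, 900.0]))
```

Turning one of the dials in the figure corresponds to changing one entry of `shape_coords`, which adds more or less of the matching shape mode to the average shape.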

If you were to digitally take the same measures on the resulting 3D shape of the persona, you may find that they do not always match the values you entered. There are two reasons: (1) the linear model may not be able to fully capture the relation between the measures and the 3D shape (we plan to explore non-linear models in the future), and (2) the measures in the database may have been taken physically, and physical measures can differ from digital ones, as explained for example in this paper:

Han, H., Nam, Y., & Choi, K. (2010). Comparative analysis of 3D body scan measurements and manual measurements of size Korea adult females. International Journal of Industrial Ergonomics, 40(5), 530-540.
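Reason (1) can be illustrated with a deliberately simple toy example. Everything here is invented for the illustration: a single measure `m`, a single shape coordinate `s`, and a quadratic relation between them. It only shows how a linear fit to a non-linear relation makes the "measured back" value drift from the entered one.

```python
import numpy as np

# Toy database: one measure m and one shape coordinate s, related non-linearly.
m = np.linspace(1.0, 2.0, 50)
s = m ** 2

# Linear fit s ~ a * m + b, standing in for the linear regression model.
a, b = np.polyfit(m, s, deg=1)

entered = 1.5                          # value entered for the persona
predicted_s = a * entered + b          # shape coordinate from the linear model
measured_back = np.sqrt(predicted_s)   # "digitally measure" the resulting shape
error = measured_back - entered        # small but non-zero mismatch
```

In this toy case the mismatch is small, but it is not zero, and it changes with the entered value.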

I hope this makes the internals of Mannequin a bit clearer.

Kind regards,

Toon.